Large Language Models (LLMs) are pushing the boundaries of what machines can understand and generate in natural language. This article examines the technical aspects of LLMs: their architecture, their training methods, and the implications of their capabilities. It closes with emerging trends that point to where the field is heading.

The Architecture of Large Language Models

At the heart of LLMs lies the Transformer architecture, a revolutionary model introduced by Vaswani et al. in 2017. The Transformer model is characterized by its ability to process sequences of data in parallel, making it highly efficient for tasks involving natural language.

arXiv:1706.03762

Encoder-Decoder Structure

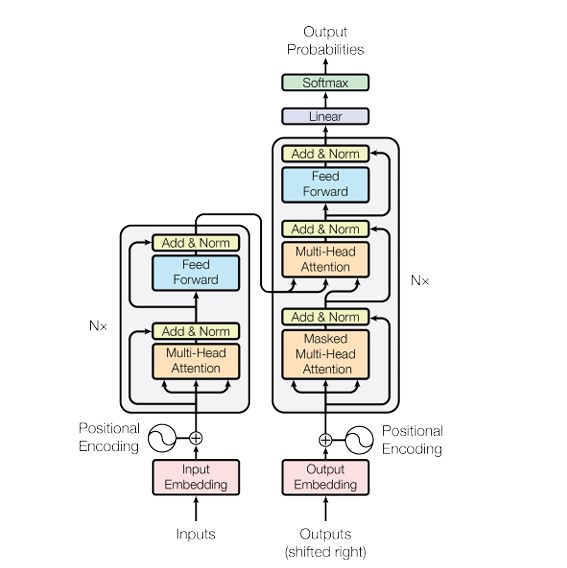

The Transformer model consists of two main components: an encoder and a decoder. The encoder processes the input sequence, converting it into a set of vectors that represent the semantic content of the text. The decoder then uses these vectors to generate the output sequence, such as a translation or a continuation of the text.

The encoder and decoder are each composed of multiple layers, and each layer combines an attention sublayer with a feed-forward neural network. Self-attention lets every token attend to the other tokens in its own sequence, while the decoder additionally attends to the encoder's output so that it can focus on different parts of the input sequence when generating each part of the output sequence. Together, these mechanisms enable the model to capture long-range dependencies and relationships between tokens.

Self-Attention Mechanism

The self-attention mechanism is a cornerstone of the Transformer architecture. It allows the model to weigh the importance of different parts of the input sequence when generating each part of the output sequence. This mechanism is crucial for understanding the context of a sentence and generating coherent and relevant responses.

The self-attention mechanism works by calculating, for each token, a score against every token in the sequence, indicating how much attention should be paid to that token. Each token is first projected into a query, a key, and a value vector. The scores are the dot products of a token's query vector with the key vectors of all tokens, scaled by the square root of the key dimension. The scores are then normalized with a softmax function, and the resulting weights are used to take a weighted sum of the value vectors, which becomes the token's output representation.
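As a rough illustration, the computation can be sketched in plain Python. The scores come from the dot product of a query with each key, scaled by the square root of the key dimension as in Vaswani et al., and the softmax weights are applied to the value vectors. The function names and the toy vectors are assumptions made for this example, and the learned projections that produce queries, keys, and values are left out for brevity.

```python
import math

def softmax(scores):
    """Turn a list of raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score each key against this query: dot product, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # The output is the attention-weighted sum of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy tokens with 2-dimensional vectors (made-up numbers).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(x, x, x)
```

Each row of `weights` sums to 1 and shows how strongly one token attends to every other token; each row of `outputs` is the corresponding blend of value vectors.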

Positional Encoding

Since the Transformer model processes sequences of data in parallel, it does not inherently understand the order of the tokens in the sequence. To address this, positional encoding is added to the input embeddings. Positional encoding is a set of vectors that represent the position of each token in the sequence. These vectors are added to the input embeddings before they are fed into the self-attention mechanism, allowing the model to understand the order of the tokens and capture the relationships between them.
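One concrete scheme is the sinusoidal encoding used in the original Transformer paper, where even dimensions use a sine and odd dimensions a cosine of the position at different frequencies. The sketch below assumes that scheme (learned position embeddings are a common alternative), and the function names and toy embedding are illustrative only.

```python
import math

def positional_encoding(seq_len, d_model):
    """Return a seq_len x d_model table of sinusoidal position vectors."""
    table = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            # Dimensions 2k and 2k+1 share one frequency.
            angle = pos / (10000 ** ((2 * (i // 2)) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table

def add_positions(embeddings):
    """Add the position vector to each token embedding, element-wise."""
    pe = positional_encoding(len(embeddings), len(embeddings[0]))
    return [[e + p for e, p in zip(emb, pos)]
            for emb, pos in zip(embeddings, pe)]

# The same (made-up) embedding at two positions yields two different inputs.
token = [0.5, 0.5, 0.5, 0.5]
encoded = add_positions([token, token])
```

Because the two copies of `token` receive different position vectors, the model can tell them apart even though their embeddings are identical.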

Feed-Forward Neural Networks

In addition to the self-attention mechanism, each layer in the encoder and decoder also contains a feed-forward neural network. This network consists of two linear transformations with a ReLU activation function in between. The feed-forward network allows the model to learn more complex patterns in the data, enhancing its ability to generate coherent and relevant responses.
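The two linear transformations with a ReLU in between can be written out directly. This is a minimal sketch for a single token vector; the weight values are made up for illustration, whereas in a real model they are learned and much larger.

```python
def linear(x, weights, bias):
    """Compute x @ weights + bias for one input vector."""
    return [sum(xi * w for xi, w in zip(x, col)) + b
            for col, b in zip(zip(*weights), bias)]

def relu(x):
    """Replace negative values with zero."""
    return [max(0.0, v) for v in x]

def feed_forward(x, w1, b1, w2, b2):
    """Position-wise feed-forward network: linear -> ReLU -> linear."""
    return linear(relu(linear(x, w1, b1)), w2, b2)

# Made-up weights: expand from 2 to 3 dimensions, then project back to 2.
w1 = [[1.0, -1.0, 0.5],
      [0.0, 2.0, -1.0]]
b1 = [0.0, 0.0, 0.0]
w2 = [[1.0, 0.0],
      [0.0, 1.0],
      [1.0, 1.0]]
b2 = [0.1, -0.1]

y = feed_forward([1.0, 2.0], w1, b1, w2, b2)
```

The same network is applied independently to every position in the sequence, which is why it operates on one vector at a time here.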

Layer Normalization

Layer normalization is applied after the self-attention mechanism and the feed-forward neural network in each layer. This normalization helps to stabilize the training process and reduce the risk of exploding gradients, making the model more robust and easier to train.
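A minimal sketch of the operation follows. In the original Transformer paper, each sublayer's output is added back to its input (a residual connection) before normalization, and the example assumes that arrangement. The `gamma` and `beta` parameters stand in for the learned scale and shift, and all values are illustrative.

```python
import math

def layer_norm(x, gamma=None, beta=None, eps=1e-5):
    """Normalize one vector to zero mean and unit variance, then scale and shift."""
    n = len(x)
    gamma = gamma or [1.0] * n
    beta = beta or [0.0] * n
    mean = sum(x) / n
    var = sum((v - mean) ** 2 for v in x) / n
    return [g * (v - mean) / math.sqrt(var + eps) + b
            for v, g, b in zip(x, gamma, beta)]

def add_and_norm(x, sublayer_output):
    """Residual connection followed by layer normalization."""
    return layer_norm([a + b for a, b in zip(x, sublayer_output)])

# x is a token's input vector; the second list plays the role of a sublayer's output.
normalized = add_and_norm([1.0, 2.0, 3.0, 4.0], [0.5, -0.5, 0.5, -0.5])
```

Whatever the scale of the incoming values, the result has a mean close to zero and a variance close to one, which keeps activations in a predictable range from layer to layer.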

arXiv:1706.03762

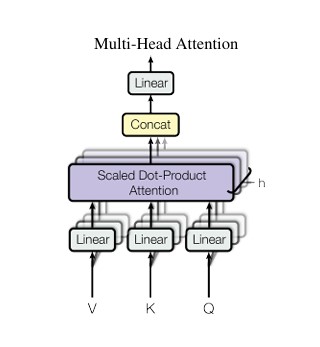

Multi-Head Attention

To capture different types of relationships in the data, the Transformer model uses multi-head attention. This involves applying the self-attention mechanism multiple times in parallel, each time with a different set of learned linear transformations. The outputs of these parallel self-attention mechanisms are then concatenated and linearly transformed to produce the final output. This allows the model to focus on different parts of the input sequence in different ways, enhancing its ability to understand complex patterns and relationships.
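The steps above (project, attend per head, concatenate, project again) can be sketched as follows. Random matrices stand in for the learned linear transformations, and the dimensions, names, and input values are assumptions chosen to keep the example small.

```python
import math
import random

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]

def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(q, k, v):
    """Scaled dot-product attention for one head."""
    scale = math.sqrt(len(k[0]))
    weights = [softmax([sum(a * b for a, b in zip(qi, kj)) / scale for kj in k])
               for qi in q]
    return matmul(weights, v)

def random_matrix(rows, cols, rng):
    """Stand-in for a learned projection matrix."""
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]

def multi_head_attention(x, heads, w_out):
    """Run each head on its own projections, concatenate, then project."""
    head_outputs = [attention(matmul(x, wq), matmul(x, wk), matmul(x, wv))
                    for wq, wk, wv in heads]
    # Concatenate the heads along the feature dimension, token by token.
    concat = [sum((h[t] for h in head_outputs), []) for t in range(len(x))]
    return matmul(concat, w_out)

rng = random.Random(0)
d_model, n_heads = 4, 2
d_head = d_model // n_heads
# Each head owns three projection matrices: query, key, and value.
heads = [tuple(random_matrix(d_model, d_head, rng) for _ in range(3))
         for _ in range(n_heads)]
w_out = random_matrix(d_model, d_model, rng)

x = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0], [1.0, 1.0, 0.0, 0.0]]
out = multi_head_attention(x, heads, w_out)
```

Each head works in a smaller subspace (`d_head` dimensions), so the concatenated result has the same width as the input and the total cost stays close to that of a single full-width head.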

Training Large Language Models

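Training LLMs involves a process known as self-supervised learning. In this process, the model learns to predict parts of the input sequence from the surrounding context, so the text itself supplies the labels. Encoder models such as BERT are trained with two objectives, masked language modeling and next-sentence prediction, while generative models such as GPT are trained to predict the next token in a sequence.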

Masked Language Modeling

Masked language modeling is a foundational training objective for LLMs. In this task, some tokens in the input sequence are randomly masked (or hidden), and the model is asked to predict the masked tokens based on the context provided by the unmasked tokens. This objective is crucial for understanding the context of a sentence and generating coherent and relevant responses.

The masking process involves randomly selecting a percentage of the input tokens and replacing them with a special token (often denoted as [MASK]). The model then attempts to predict the original token based on the context provided by the unmasked tokens. This process encourages the model to learn the statistical patterns and rules of natural language, such as syntax, grammar, and vocabulary.
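The masking step can be sketched in a few lines. The 15% default mirrors the rate used by BERT, and the function name, the whitespace tokenization, and the sample sentence are assumptions for illustration. BERT also leaves some selected tokens unchanged or swaps them for random tokens, which this simplified version omits.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, mask_prob=0.15, seed=None):
    """Return (masked_tokens, labels); labels hold the original token at masked positions."""
    rng = random.Random(seed)
    masked, labels = [], []
    for token in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK)
            labels.append(token)   # the model must recover this token
        else:
            masked.append(token)
            labels.append(None)    # no loss is computed at this position
    return masked, labels

tokens = "the cat sat on the mat because it was tired".split()
# A higher rate than the default so that a short sentence is likely to show masks.
masked, labels = mask_tokens(tokens, mask_prob=0.3, seed=1)
```

Only positions with a label contribute to the training loss; the unmasked tokens serve purely as context.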

Next-Sentence Prediction

Next-sentence prediction is another key training objective for LLMs. In this task, the model is given two sentences and is tasked with predicting whether the second sentence is a logical continuation of the first. This objective helps the model understand the relationships between sentences and paragraphs, enabling it to generate coherent and contextually relevant text.

In BERT, where this objective was introduced, the two sentences are packed into a single input: a special classification token (denoted [CLS]) is placed at the start, and a separator token ([SEP]) follows each sentence. The model's final representation of the [CLS] token is passed to a binary classifier that predicts whether the second sentence actually follows the first. During training, half of the pairs are true consecutive sentences and half pair the first sentence with a random one, so the model must learn the relationship between sentences to tell them apart.
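To make the input layout concrete, the sketch below builds training pairs in the BERT style, with [CLS] at the start and [SEP] after each sentence. The helper name, the whitespace tokenization, and the sample sentences are assumptions made for this example.

```python
import random

def make_nsp_example(sentences, index, rng):
    """Build one (tokens, is_next) pair; is_next is 1 when the second sentence truly follows."""
    first = sentences[index]
    if rng.random() < 0.5:
        second, is_next = sentences[index + 1], 1
    else:
        # Pick a sentence that is neither the first one nor its true successor.
        candidates = [s for i, s in enumerate(sentences)
                      if i not in (index, index + 1)]
        second, is_next = rng.choice(candidates), 0
    tokens = ["[CLS]"] + first.split() + ["[SEP]"] + second.split() + ["[SEP]"]
    return tokens, is_next

sentences = [
    "the sky darkened",
    "rain began to fall",
    "she opened her umbrella",
    "the bakery sells bread",
]
rng = random.Random(0)
examples = [make_nsp_example(sentences, i, rng)
            for i in range(len(sentences) - 1)]
```

The `is_next` label is the target for the binary classifier that reads the model's representation of the [CLS] position.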

Training Process

The training process for LLMs iteratively adjusts the model’s parameters to minimize a loss function, which measures the difference between the model’s predictions and the actual data. This is typically done with a variant of the stochastic gradient descent (SGD) algorithm, such as Adam, which updates the parameters in the direction that reduces the loss.
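The update rule itself is simple, and a toy example makes it visible. The sketch below fits a two-parameter line with mini-batch SGD. The model, data, learning rate, and step count are all assumptions chosen for illustration; an LLM applies the same idea to billions of parameters.

```python
import random

def sgd_step(params, grads, lr):
    """Move each parameter a small step against its gradient."""
    return [p - lr * g for p, g in zip(params, grads)]

def loss_and_grads(params, batch):
    """Mean squared error of y = w*x + b on a batch, with its gradients."""
    w, b = params
    n = len(batch)
    errors = [(w * x + b) - y for x, y in batch]
    loss = sum(e * e for e in errors) / n
    grad_w = sum(2 * e * x for e, (x, _) in zip(errors, batch)) / n
    grad_b = sum(2 * e for e in errors) / n
    return loss, [grad_w, grad_b]

data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]  # generated by y = 2x + 1
rng = random.Random(0)
params = [0.0, 0.0]
history = []
for step in range(2000):
    batch = rng.sample(data, 2)          # "stochastic": a random mini-batch per step
    loss, grads = loss_and_grads(params, batch)
    history.append(loss)
    params = sgd_step(params, grads, lr=0.05)
```

After training, `params` is close to the true values `[2.0, 1.0]`, and `history` records how the mini-batch loss falls as the updates accumulate.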

During training, the model is fed with large batches of text data, and it learns to predict the next token in a sequence based on the previous tokens. The model’s predictions are compared to the actual next tokens, and the difference (the loss) is used to update the model’s parameters. This process is repeated for many iterations, allowing the model to learn the statistical patterns and rules of natural language.
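For next-token prediction, the loss at each position is usually the cross-entropy, that is, the negative log of the probability the model assigned to the token that actually came next. The sketch below shows how training pairs are derived from a sequence and how that loss behaves. The probability table is a hypothetical model output, not the result of any real model.

```python
import math

def next_token_pairs(tokens):
    """Pair every prefix of the sequence with the token that follows it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

def cross_entropy(predicted_probs, target):
    """Negative log-probability the model assigned to the actual next token."""
    return -math.log(predicted_probs[target])

tokens = ["the", "cat", "sat", "down"]
pairs = next_token_pairs(tokens)

# Hypothetical model output for the context ["the", "cat"].
probs = {"sat": 0.7, "ran": 0.2, "down": 0.1}
loss_if_sat = cross_entropy(probs, "sat")    # target the model considered likely
loss_if_down = cross_entropy(probs, "down")  # target the model considered unlikely
```

The loss is small when the model puts high probability on the correct token and grows as that probability shrinks, which is the signal used to update the parameters.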

Fine-Tuning Large Language Models

While self-supervised learning provides a solid foundation, it is often insufficient for specific tasks. To adapt LLMs to perform specialized tasks, such as summarization, translation, or question answering, they are fine-tuned on a smaller, task-specific dataset with supervised learning objectives. Fine-tuning allows LLMs to adjust their general knowledge to the specific requirements of the task, enhancing their performance and accuracy.

Fine-Tuning Process

The fine-tuning process involves training the LLM on a smaller dataset that is relevant to the specific task at hand. This dataset is typically labeled with the correct outputs for the task, and the model is trained to minimize the difference between its predictions and the actual outputs.

Fine-tuning is typically faster than the initial training because the model has already learned the general structure and semantics of natural language, so the parameter updates only need to adapt this knowledge to the specific task.
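A toy analogy shows why starting from pretrained parameters helps. In the sketch below, a two-parameter line stands in for the model, "pretrained" weights fit a general pattern, and the task data follows a slightly different pattern. Every name and number is an assumption made for illustration; real fine-tuning applies the same idea to a full network.

```python
def mse_grads(params, data):
    """Mean squared error of y = w*x + b, with its gradients."""
    w, b = params
    n = len(data)
    errors = [(w * x + b) - y for x, y in data]
    loss = sum(e * e for e in errors) / n
    grads = [sum(2 * e * x for e, (x, _) in zip(errors, data)) / n,
             sum(2 * e for e in errors) / n]
    return loss, grads

def train(params, data, lr=0.05, tolerance=1e-4, max_steps=10000):
    """Run gradient descent until the loss drops below tolerance; return (params, steps)."""
    for step in range(max_steps):
        loss, grads = mse_grads(params, data)
        if loss < tolerance:
            return params, step
        params = [p - lr * g for p, g in zip(params, grads)]
    return params, max_steps

# Task-specific data, generated by y = 2.1x + 1.1.
task_data = [(0.0, 1.1), (1.0, 3.2), (2.0, 5.3), (3.0, 7.4)]

pretrained = [2.0, 1.0]  # stand-in for weights learned on general data (y = 2x + 1)
_, steps_finetune = train(pretrained, task_data)
_, steps_scratch = train([0.0, 0.0], task_data)
```

Starting from the pretrained values reaches the target loss in fewer steps than starting from zero, because the parameters begin close to a good solution for the task.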

Task-Specific Objectives

The objectives for fine-tuning vary depending on the task. For example, in a summarization task, the objective might be to predict a shorter version of a given text that captures the main points. In a translation task, the objective might be to predict the translation of a given text into another language. In a question-answering task, the objective might be to predict the answer to a given question based on a given context.

Fine-tuning allows LLMs to specialize in specific tasks, enabling them to perform these tasks with high accuracy and relevance. By adjusting the model’s parameters to the specific requirements of the task, fine-tuning ensures that the model’s predictions are not only coherent and contextually relevant but also accurate and useful for the task at hand.

Capabilities of Large Language Models

LLMs have a wide range of capabilities, including:

- Text Generation: LLMs can generate human-like text on a wide range of topics, from creative writing to technical documentation.

- Question Answering: They can answer questions based on a given context, demonstrating a deep understanding of the subject matter.

- Translation: LLMs can translate text between different languages, enabling communication across linguistic barriers.

- Summarization: They can summarize long documents, extracting the key points and presenting them in a concise format.

- Sentiment Analysis: LLMs can analyze the sentiment of a text, determining whether it is positive, negative, or neutral.

Applications and Implications of Large Language Models

LLMs have a wide range of applications across various domains, including:

Education

In the education sector, LLMs can revolutionize learning and teaching by providing personalized feedback, tutoring, assessment, and content generation. For example, LLMs can generate summaries of textbooks, quizzes based on lectures, or explanations of complex concepts and formulas. This personalized approach can enhance the learning experience, making education more accessible and effective for students of all ages.

Healthcare

In healthcare, LLMs can assist patients and doctors with diagnosis, treatment, and prevention by providing medical information, advice, and support. For instance, LLMs can analyze symptoms and medical records, suggest medications and therapies, or monitor health conditions and risks. This can lead to more accurate diagnoses, personalized treatment plans, and improved patient outcomes.

Business

For businesses, LLMs can enhance customer service, content creation, and decision-making support. By understanding customer inquiries and feedback, LLMs can provide more accurate and helpful responses, improving customer satisfaction. Additionally, LLMs can generate content for marketing campaigns, social media posts, and product descriptions, saving time and resources for businesses.

Implications

The capabilities of LLMs are transforming the way we interact with technology, offering solutions that are not only efficient but also capable of understanding and responding to human language in a way that closely mimics human intelligence. This has profound implications for various aspects of society, including:

- Increased Accessibility: LLMs can make information and services more accessible to people with disabilities, enabling them to communicate and interact with technology more effectively.

- Improved Efficiency: By automating repetitive tasks and providing personalized assistance, LLMs can increase efficiency in various sectors, from healthcare to customer service.

- Enhanced Personalization: LLMs can offer personalized experiences, whether in education, healthcare, or business, leading to better outcomes and higher satisfaction levels.

Emerging Trends in Large Language Models

As we look to the future, several emerging trends are shaping the landscape of LLMs:

Multimodal Learning

The integration of text, images, and other forms of data is becoming increasingly important, enabling LLMs to understand and generate content across different media types. This trend is particularly relevant in the era of social media, where content often includes images, videos, and other multimedia elements.

Explainable AI

There is a growing demand for models that can provide insights into their decision-making processes, making LLMs more transparent and accountable. This includes developing methods to interpret the outputs of LLMs, helping users understand why a particular response was generated.

Ethics and Fairness

As LLMs become more integrated into society, addressing ethical considerations and ensuring fairness in their applications is becoming a critical focus. This includes developing guidelines and frameworks to ensure that LLMs are used responsibly and do not perpetuate biases present in the training data.
